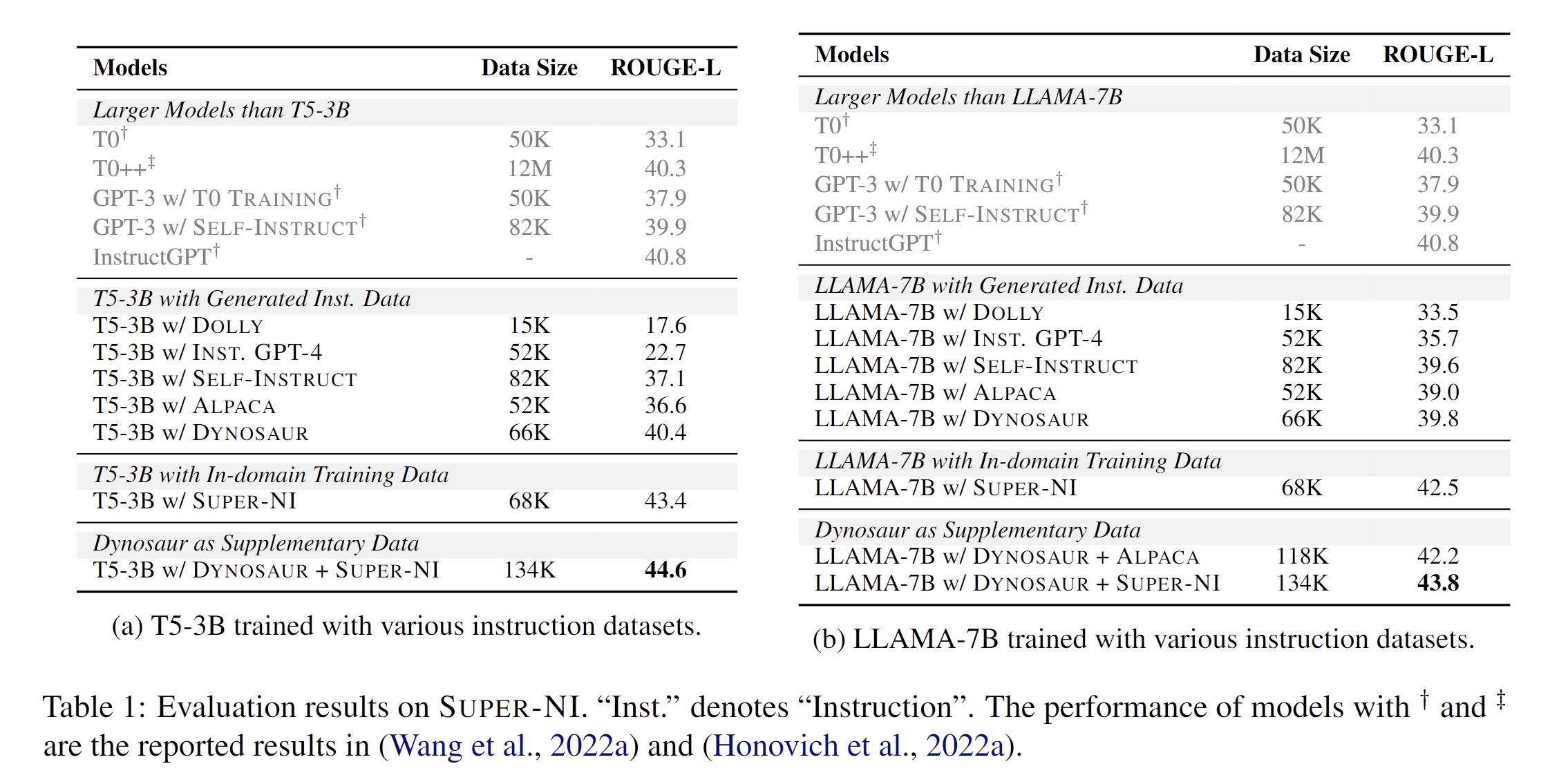

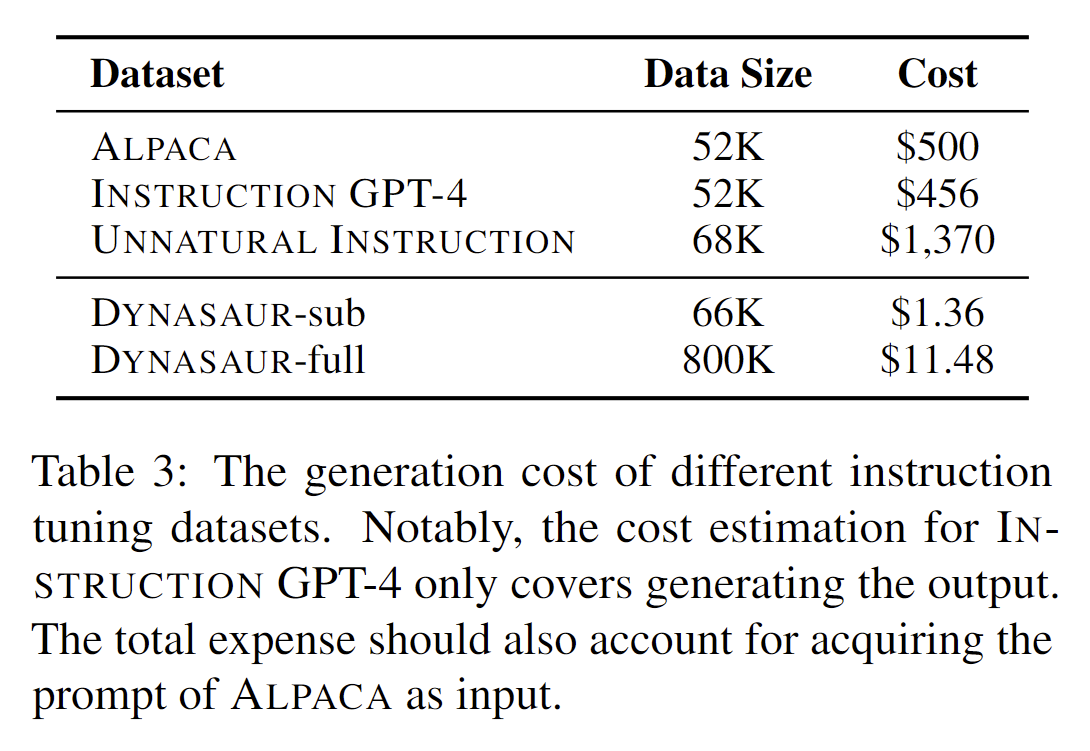

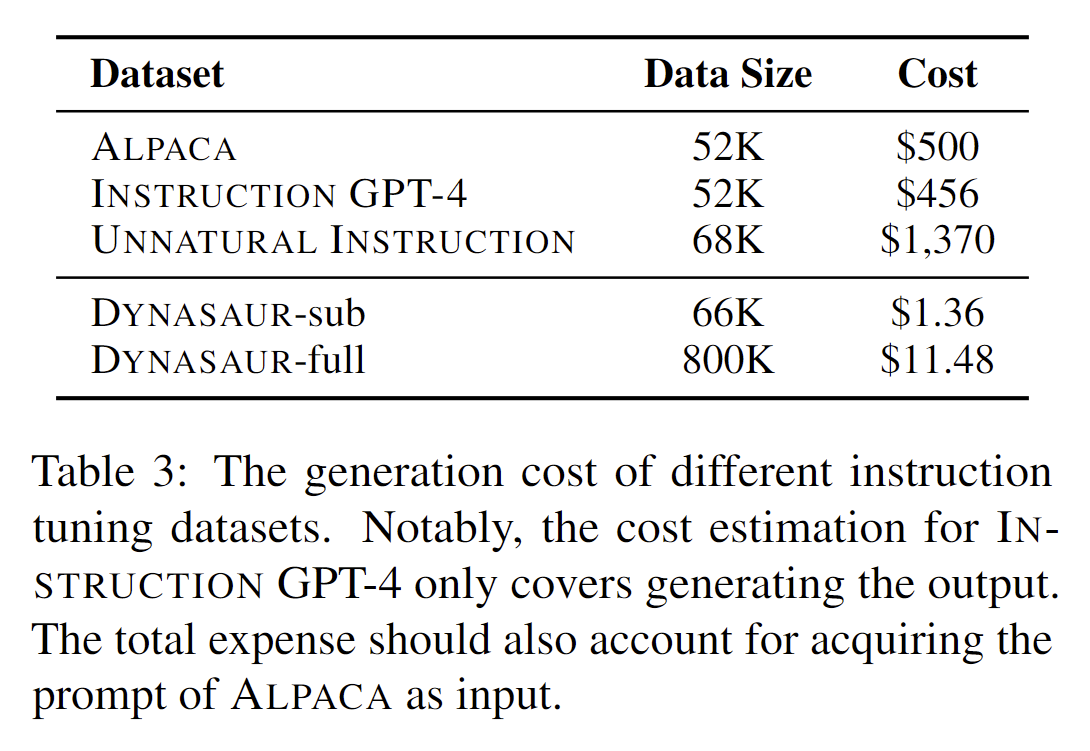

We calculate the cost of generating all Dynosaur instructions, as well as the cost of the subset used for Super-NI fine-tuning. Dynosaur delivers better performance at a much lower generation cost.

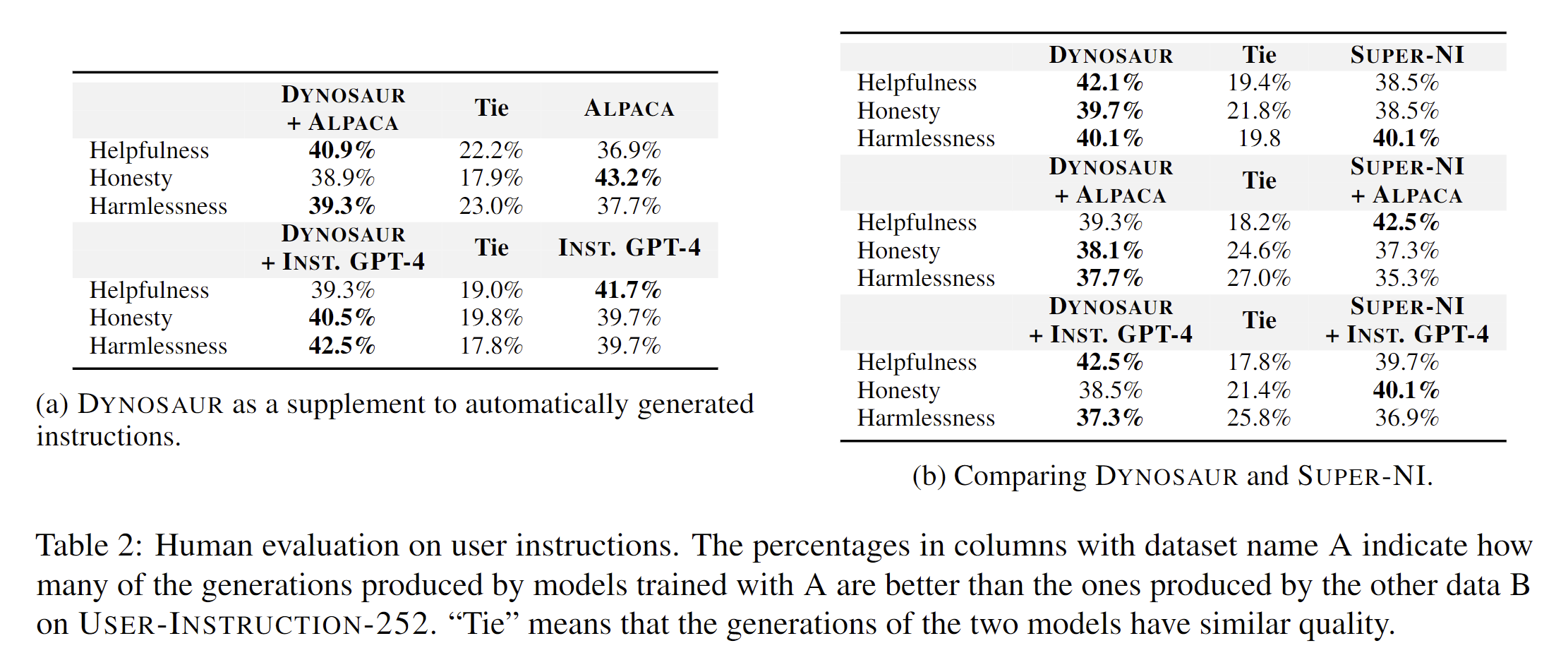

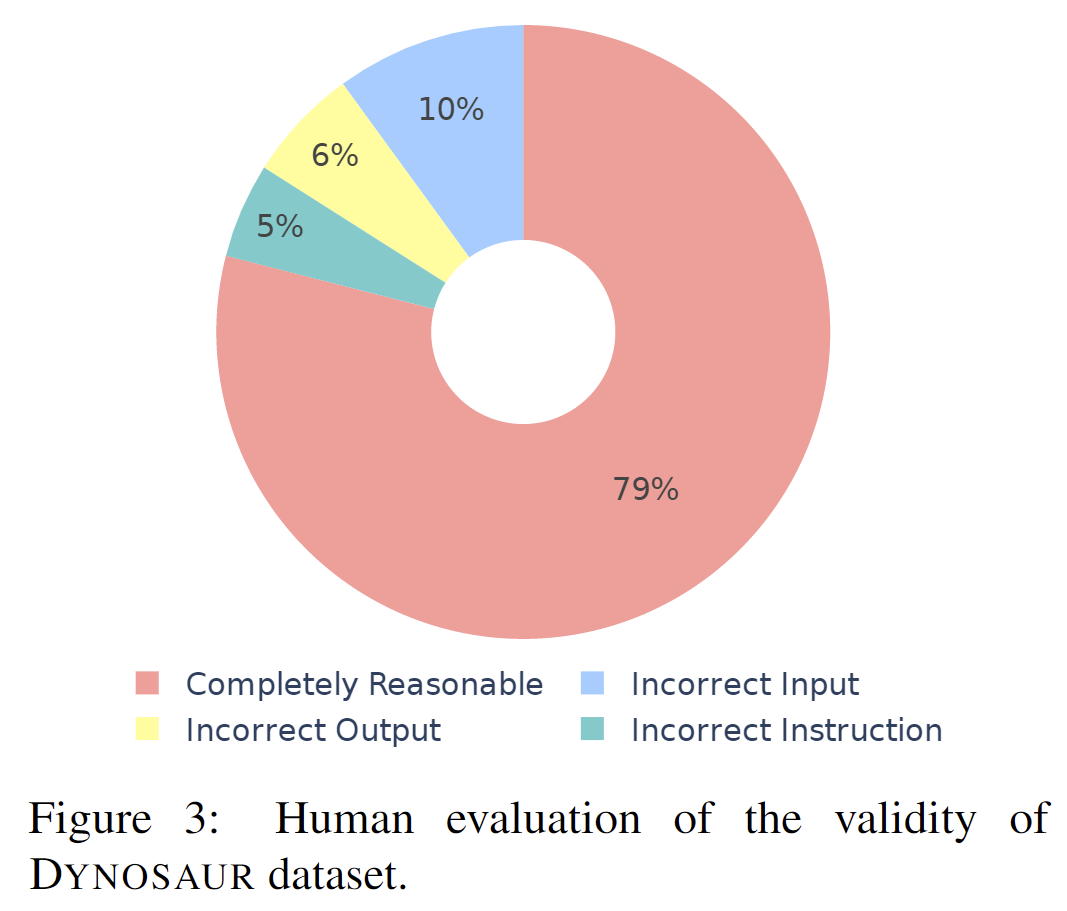

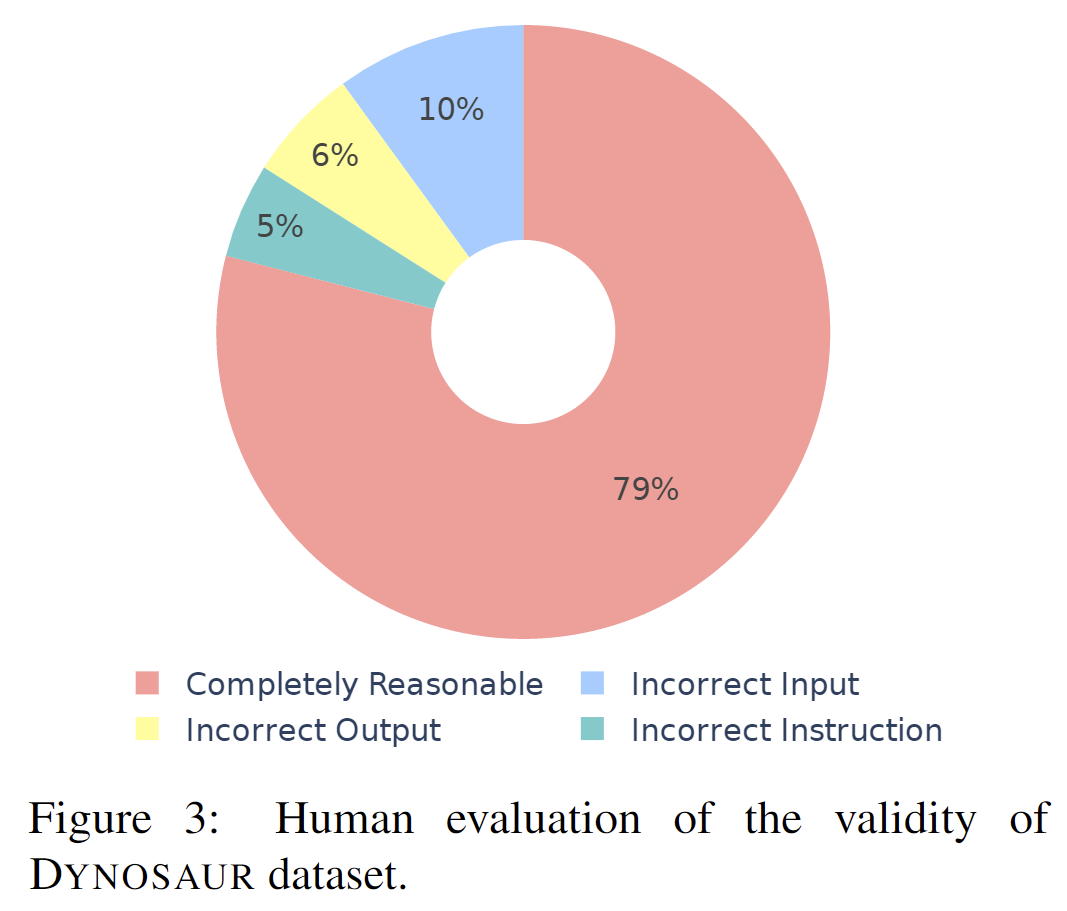

We also recruit human annotators to evaluate (instruction, input, output) triples. Dynosaur instances are judged completely correct in 79% of cases, a substantial improvement over the 54% reported for Self-Instruct.

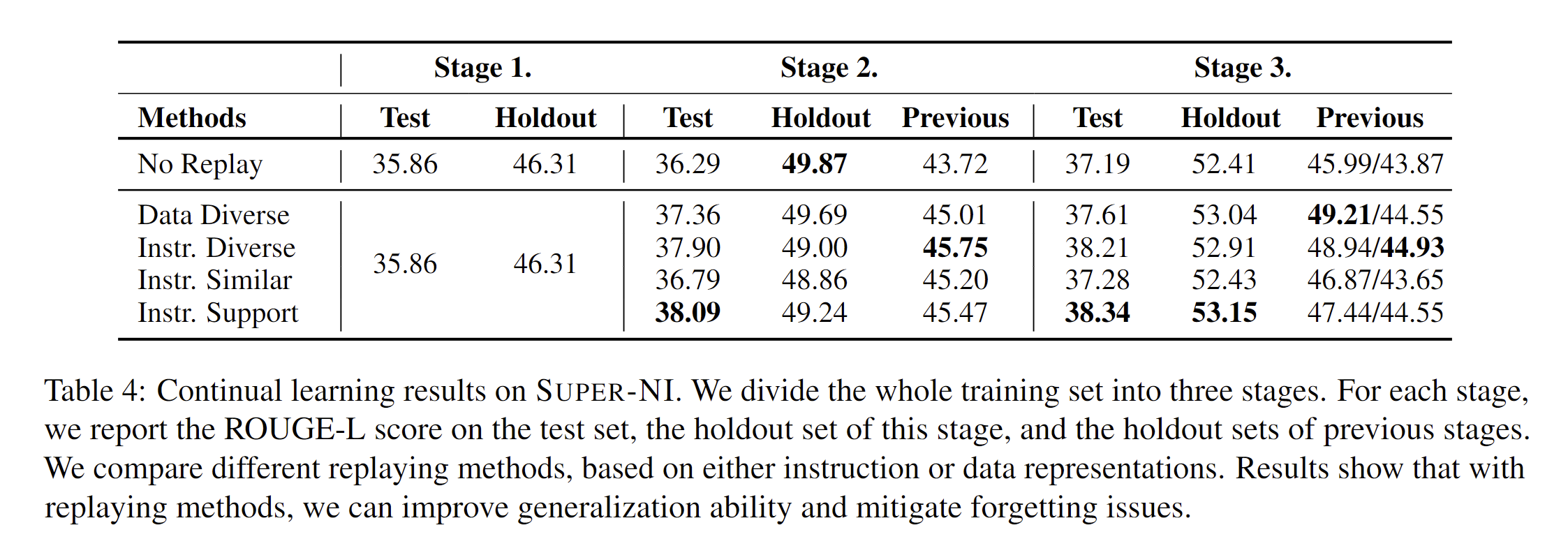

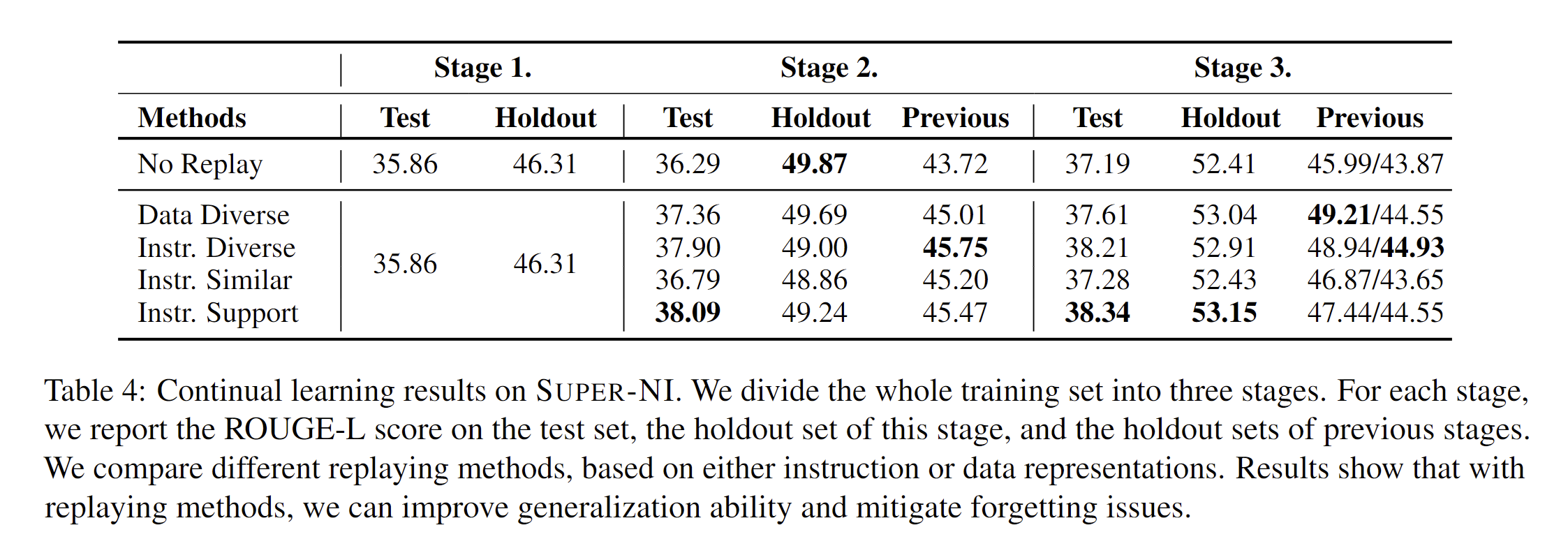

As Dynosaur can expand over time as new tasks come in, an important question is how to adapt a trained instruction-following model to new tasks without suffering from catastrophic forgetting.

Experiments on Super-NI show that replay is an effective way to improve generalization and mitigate forgetting. For instruction tuning, we further propose selecting replay tasks based on instruction representations.

Results show that selecting replay tasks with the most diverse instruction representations can outperform selection based on the diversity of data representations.
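
To make the idea of diversity-based replay selection concrete, here is a minimal sketch. It assumes each previous task already has an instruction embedding (for example from a sentence encoder) and uses greedy farthest-point selection as one plausible way to pick a diverse subset. The text above does not specify the selection algorithm, so the function name `select_diverse_tasks`, the distance metric, and the toy vectors are all illustrative assumptions rather than the method used in the experiments.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def select_diverse_tasks(embeddings, k):
    """Greedily pick up to k task names whose embeddings are far apart.

    embeddings: dict mapping task name -> instruction embedding (list of floats).
    This is a hypothetical helper, not part of any released codebase.
    """
    names = list(embeddings)
    if k <= 0 or not names:
        return []
    # Start from the first task (an arbitrary but deterministic choice).
    selected = [names[0]]
    # Distance from each task to its nearest already-selected task.
    min_dist = {n: euclidean(embeddings[n], embeddings[names[0]]) for n in names}
    while len(selected) < min(k, len(names)):
        remaining = [n for n in names if n not in selected]
        # Pick the remaining task farthest from everything selected so far.
        candidate = max(remaining, key=lambda n: min_dist[n])
        selected.append(candidate)
        for n in names:
            d = euclidean(embeddings[n], embeddings[candidate])
            if d < min_dist[n]:
                min_dist[n] = d
    return selected


# Toy instruction embeddings (made-up 2-D vectors for illustration only).
toy = {
    "summarize": [1.0, 0.0],
    "summarize_short": [0.9, 0.1],
    "translate": [0.0, 1.0],
    "classify": [-1.0, 0.0],
}
replay_tasks = select_diverse_tasks(toy, 3)
print(replay_tasks)
```

On the toy data, the near-duplicate `summarize_short` task is skipped in favor of tasks whose instructions lie farther from those already chosen. The selected tasks would then be mixed into the training data for the new tasks during replay.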